Are your functions overly distrustful? We’ll see just how the Null Object Pattern can restore a culture of trust and cut down flow control bugs on today’s episode of TL;DR, the JavaScript codecast series that teaches working web developers to craft exceptional software in 5 minutes a week.

Transcript

We just hit 100 subscribers, and I want to thank you so much for watching, sharing and subscribing! This series is based on problems faced in real-world consulting projects, so each episode is designed to teach powerful patterns that save you and your team time. But that takes a lot of time! Each 5–7 minute episode takes around 30 hours from inception to release.

So I want to ask you to consider supporting me on Patreon so I can keep crafting great content that helps you craft exceptional code. Think of it as a pay-what-you-like screencast subscription.

Alright, on to today’s pattern.

When each line of code has to defend against previous lines, we’re left with a tangle of branching and flow control constructs, like if…else and early returns. This is especially tricky for error handling code, which can turn an otherwise docile function into a flow control nightmare.

Today we’re working on authentication middleware that’s taken from a chapter in my book, Functional Design Patterns for Express.js. If you’re hopping into a Node backend and have loved the design-oriented approach we take on TL;DR, I encourage you to check it out.

We’re making authenticated HTTP requests to an Express backend and supplying a special token called a JSON Web Token. If you’re new to JWTs, think of them as the picture page of a passport: they encode details about the user, and are securely signed so the backend knows they’re authentic.

makeRequestWithToken('g00d_t0k3n');

// => '✅ 200: Welcome to the backend.'

makeRequestWithToken('3mpt1_t0k3n');

// => '🚫 401: Bad token.'

makeRequestWithToken('0ld_t0k3n');

// => 💥 TokenExpiredError: jwt expired

makeRequestWithToken('f@k3_t0k3n');

// => 💥 JsonWebTokenError: jwt malformed

As long as the token is valid, the backend lets us use any of its APIs. But if the token is missing some information, has expired, or has been tampered with, the backend halts the request in its tracks.

The function responsible for this guard behavior is a middleware function called checkToken():

let checkToken = (req, res, next) => {

let payload = jwt.verify(req.token, 's3cr3t');

if (payload && payload.user) {

req.user = payload.user;

} else {

res.status(401).send('Bad token.');

return;

}

next();

};

It tries to decode the contents of the JSON Web Token, called the payload. If the token is successfully decoded, it stores the user information on the request object and invokes next() to continue. But if the token is bad, it halts the request and immediately responds with a 401 Unauthorized status code.

But a lot of other things can go wrong. A client could supply an expired token, or they might tamper with it; in either case, the jwt.verify() function throws an exception. Right now, the checkToken() function is completely oblivious to these potential errors.

We should never allow a known exception to go uncaught, otherwise the backend’s response will just hang. So instead, we need to catch any JWT-related errors and respond with a 401 status code.

let checkToken = (req, res, next) => {

- let payload = jwt.verify(req.token, 's3cr3t');

+ let payload;

+ try {

+ payload = jwt.verify(req.token, 's3cr3t');

+ } catch (error) {

+ /* Suppress the error */

+ }

if (payload && payload.user) {

...

};

To do that, we can wrap try…catch around the verify() call. But as we learned in the last two episodes, an unqualified catch is almost always a bug. We must only catch error types we intend to handle. We’ll use an if…else statement to rethrow the error if it isn’t a TokenExpiredError or JsonWebTokenError.

let checkToken = (req, res, next) => {

let payload;

try {

payload = jwt.verify(req.token, 's3cr3t');

} catch (error) {

if (error instanceof TokenExpiredError

|| error instanceof JsonWebTokenError) {

/* Suppress the error */

} else {

throw error;

}

}

if (payload && payload.user) {

req.user = payload.user;

} else {

res.status(401).send('Bad token.');

return;

}

next();

};

makeRequestWithToken('g00d_t0k3n');

// => '✅ 200: Welcome to the backend.'

makeRequestWithToken('3mpt1_t0k3n');

// => '🚫 401: Bad token.'

makeRequestWithToken('0ld_t0k3n');

// => '🚫 401: Bad token.'

makeRequestWithToken('f@k3_t0k3n');

// => '🚫 401: Bad token.'

This is the correct way to handle all these edge cases, but now checkToken() is swimming in flow control constructs: early returns, try…catch, throw, and an unhealthy dose of if…else statements too. And sadly, this style is typical of most popular middleware libraries.

Each line of code is constantly on guard, as though it can’t trust the lines before it. So how do we nuke these flow control constructs?

Last episode we derived a helper called swallow() that could help. swallow() is a higher-order function that runs some code that could potentially blow up. If it does, it suppresses the error and instead returns the result of another function.

let swallow = (type) => (fail) => (fn) => (...args) => {

try {

return fn(...args);

} catch (error) {

if (!(error instanceof type)) { throw error; }

return fail(error);

}

};

let safeFindBlog = swallow(NotFound)(

() => 'Missing blog'

)(unsafeFindBlog);

unsafeFindBlog({ id: 5 });

// => { title: 'I 😍 JS' }

unsafeFindBlog({ id: 100 });

// => 💥 NotFound

safeFindBlog({ id: 100 });

// => 'Missing blog'

Let’s try using swallow() in place of the try…catch and if…else statements. If jwt.verify() throws a TokenExpiredError, we’ll catch it and instead return null to make it mirror the old behavior.

let checkToken = (req, res, next) => {

let payload =

swallow(TokenExpiredError)(

() => null

)(

() => jwt.verify(req.token, 's3cr3t')

)();

if (payload && payload.user) {

...

};

Since swallow() is a higher-order function, we can also catch a JsonWebTokenError by composing it with another swallow().

let checkToken = (req, res, next) => {

let payload =

+ swallow(JsonWebTokenError)(

+ () => null

+ )(

swallow(TokenExpiredError)(

() => null

)(

() => jwt.verify(req.token, 's3cr3t')

+ )

)();

if (payload && payload.user) {

...

};

This is horrible to read, but it behaves correctly and removed several flow control constructs. What about the remaining conditionals? It would help if we could go ahead and destructure the payload’s user property. Then the following code could be less defensive about the shape of payload.

Well if a TokenExpiredError is thrown, swallow() will return null, which isn’t an object and can’t be destructured. So what if instead of returning null, we returned a benign value that has the shape of a valid payload, such as an object with a user property? Then even if an exception is thrown, we can be sure that the payload will have the right shape.

let checkToken = (req, res, next) => {

- let payload =

+ let { user } =

swallow(JsonWebTokenError)(

- () => null

+ () => ({ user: null })

)(

swallow(TokenExpiredError)(

- () => null

+ () => ({ user: null })

)(

() => jwt.verify(req.token, 's3cr3t')

)

)();

- if (payload && payload.user) {

+ if (user) {

- req.user = payload.user;

+ req.user = user;

} else {

...

};

By substituting a benign value as early as possible, we don’t have to be defensive later on. In Object-Oriented Programming, this benign value is called a Null Object. It’s often a subclass of the expected object type, and should respond to the same messages.

class User {

constructor({ id, name, email }) {

this.name = name;

this.email = email;

this.id = id || generateId();

}

}

class NullUser extends User {

constructor() {

super({

id: '00000000',

name: 'NullUser',

email: '[email protected]'

});

}

}

Since we’re taking a more functional approach, we won’t create a Null Object class, but we can still lift this Null Object into a variable called nullPayload to better communicate intent.

let nullPayload = { user: null };

I use this pattern so often, I like to create a utility called rescueWith() that behaves exactly like swallow(), except that we don’t need the extra function wrapping around the nullPayload.

let rescueWith = (type) => (fallback) =>

swallow(type)(() => fallback);

let checkToken = (req, res, next) => {

let { user } =

rescueWith(JsonWebTokenError)(nullPayload)(

rescueWith(TokenExpiredError)(nullPayload)(

() => jwt.verify(req.token, 's3cr3t')

)

)();

if (user) {

...

};

That helps cut down the syntactic noise, and once we move the arguments for jwt.verify() to the end:

let checkToken = (req, res, next) => {

let { user } =

rescueWith(JsonWebTokenError)(nullPayload)(

rescueWith(TokenExpiredError)(nullPayload)(

jwt.verify

)

)(req.token, 's3cr3t');

if (user) {

...

We now see the entire function can be extracted from checkToken() altogether! Let’s call it safeVerifyJWT since it works exactly like jwt.verify() but just replaces errors with a safe value.

let safeVerifyJWT =

rescueWith(JsonWebTokenError)(nullPayload)(

rescueWith(TokenExpiredError)(nullPayload)(

jwt.verify

)

);

let checkToken = (req, res, next) => {

let { user } = safeVerifyJWT(req.token, 's3cr3t');

if (user) {

...

Finally, let’s whip out our compose() helper to remove the nesting.

let safeVerifyJWT = compose(

rescueWith(JsonWebTokenError)(nullPayload),

rescueWith(TokenExpiredError)(nullPayload),

)(jwt.verify);

This refactor has helped us discover the boundary we should have seen all along: all that try…catch and if…else nonsense was just about making a version of jwt.verify() that behaved a little differently — just the sort of thing higher-order functions do so well.

And now checkToken() is back to focusing on the naive happy path. With all the noise out of the way, we can confidently reason that next() will only be called if there’s a user, so we can move it into the if clause and eliminate the early return in the else. This code now has zero flow control constructs!

let checkToken = (req, res, next) => {

let { user } = safeVerifyJWT(req.token, 's3cr3t');

if (user) {

req.user = user;

+ next();

} else {

res.status(401).send('Bad token.');

- return;

}

- next();

};

Optionally, we can even rewrite the remaining if…else statement into a ternary expression to prohibit any flow control constructs at all. But whether or not you use the ternary, the final checkToken() function reads nicely thanks to small, well-behaved functions and a predictable flow.

let nullPayload = { user: null };

let safeVerifyJWT = compose(

rescueWith(JsonWebTokenError)(nullPayload),

rescueWith(TokenExpiredError)(nullPayload),

)(jwt.verify);

let checkToken = (req, res, next) => {

let { user } = safeVerifyJWT(req.token, 's3cr3t');

return user

? (req.user = user, next())

: res.status(401).send('Bad token.');

};

We’ve been building up to this refactor for a few episodes, but by letting things get ugly instead of skipping directly to rescueWith(), we saw how composition always wins in the end — even if the process seems to produce more code.

And that journey helped us identify and solve the underlying problem: trust. Each line of code was defensive because it couldn’t safely trust the results of lines before it. With this variation of the Null Object Pattern, we replaced edge cases with benign values. Once we did that, the boundaries became detangled so we could extract a safe version of jwt.verify().

Trust is a powerful refactoring tool. Today, look for try…catch statements, followed by if…else statements, and use the Null Object Pattern and rescueWith() to restore a culture of trust.

That’s it for today! If you loved today’s episode, please consider supporting the channel on Patreon. Want to keep leveling up your craft? Don’t forget to subscribe to the channel for more rapid codecasts on design patterns, refactoring and development approaches.

]]>

How do you handle runtime errors without a mess of try…catch and if…else statements? Let’s see how higher-order functions and composition can help on today’s episode of TL;DR, the JavaScript codecast series that teaches working web developers to craft exceptional software in 5 minutes a week.

Transcript

Last episode we saw how Custom Errors can often make our code worse, but Custom Exceptions can help by allowing intermediary functions to focus only on the feature’s happy path. If you’re just now joining us, hop back to the previous episode on Custom Exceptions.

Exceptions are useful when they eliminate if…else statements from calling functions, but at some point an Exception needs to be caught and handled, and that’s where the try…catch statement tends to make a mess of things.

Today we’re continuing to refactor a tiny chatbot we started a few episodes ago that helps outdoor enthusiasts find great trails to hike.

let chatbot = (message) => {

return viewHike(message);

};

chatbot('view hike mirror lake');

// => 'Details about <mirror lake>'

chatbot('view hike lost lake');

// => 💥 NotFound: lost lake

chatbot('show hike blue ridge');

// => 💥 ValidationError: show hike blue ridge

Like last time, our chatbot only understands one command, view hike. Most of the time this command replies with details about the hike, but when users ask for a hike that isn’t in the database or their syntax is a bit off, the viewHike() function will throw a custom exception like a NotFound error or a ValidationError.

In either case, the chatbot shouldn’t blow up and stop running, so we started by wrapping a try…catch statement around the problematic code.

let chatbot = (message) => {

try {

return viewHIke(message);

} catch (error) {

return `No such hike.`;

}

};

chatbot('view hike mirror lake');

// => 'No such hike.'

chatbot('view hike lost lake');

// => 'No such hike.'

chatbot('show hike blue ridge');

// => 'No such hike.'

But we quickly realized that every use of try…catch takes a substantial amount of boilerplate to keep from introducing a catch-all bug, like accidentally suppressing a ReferenceError.

To make sure we only rescued a particular error type, we introduced a simple utility called rescue(): a guard clause which rethrows the error if the type differs from what we intended to catch.

let chatbot = (message) => {

try {

return viewHIke(message);

} catch (error) {

rescue(error, NotFound);

return `No such hike.`;

}

};

chatbot('view hike mirror lake');

// => 💥 ReferenceError: viewHIke is not defined

let chatbot = (message) => {

try {

- return viewHIke(message);

+ return viewHike(message);

} catch (error) {

rescue(error, NotFound);

return `No such hike.`;

}

};

chatbot('view hike mirror lake');

// => 'Details about <mirror lake>'

chatbot('view hike lost lake');

// => 'No such hike.'

chatbot('show hike blue ridge');

// => 💥 ValidationError: show hike blue ridge

The problem with rescue() is that it only helps us catch one type of error at a time. So how do we handle both a NotFound error and ValidationError? We could make the rescue() function accept multiple error types, but then we couldn’t customize the fallback message based on the error type.

So do we have to give up the rescue() utility altogether and use cascading if…else statements to uniquely handle different error types? Maybe not if we factor a little further.

Our remaining try…catch boilerplate is starting to turn into an obvious pattern: if we were to reuse this try…catch in another part of the codebase, all that changes is the function to invoke, what type of error to rescue, and what to return if there is an error.

Let’s extract this formula into a function called swallow(), which takes the error type to swallow, a fallback function, and a function that will potentially throw an error.

let swallow = (type, fail, fn) => {

try {

return fn();

} catch (error) {

rescue(error, type);

return fail(error);

}

};

Now we’ll use swallow() to create a new version of viewHike() that is safe from NotFound errors.

let safeViewHike = (message) =>

swallow(NotFound, () => `No such hike.`,

() => viewHike(message)

)

;

let chatbot = safeViewHike;

It seems to work as before! But this code is still pretty verbose, and some might argue it’s more cryptic than simply writing a try…catch with cascading if…else statements. Well, if we just change the signature of swallow() a bit to take advantage of currying, we can eliminate a lot of the extra function calls and argument gathering.

-let swallow = (type, fail, fn) => {

+let swallow = (type) => (fail) => (fn) => (...args) => {

try {

- return fn();

+ return fn(...args);

} catch (error) {

rescue(error, type);

return fail(error);

}

};

let safeViewHike =

swallow(NotFound)(() => `No such hike.`)(

viewHike

);

Whoah, look at swallow() now! It’s a Higher-Order Function: it takes in an unsafe function that throws a particular kind of error, and returns a safe version of the function.

Because swallow() returns a function that is safe from the NotFound error type, there’s no reason we can’t pass that function into swallow() again to make it safe from a ValidationError too!

let safeViewHike =

swallow(ValidationError)(() => `Invalid format.`)(

swallow(NotFound)(() => `No such hike.`)(

viewHike

)

);

chatbot('view hike mirror lake');

// => 'Details about <mirror lake>'

chatbot('view hike lost lake');

// => 'No such hike.'

chatbot('show hike blue ridge');

// => 'Invalid format.'

That nesting is a bit nasty, but this is just the sort of thing the compose() utility is for:

let compose = (...fns) => x => fns.reduceRight((v, f) => f(v), x);

Instead of nesting swallow()s inside each other, we can list them out from top to bottom and feed the original viewHike() function at the very end. It works exactly the same way as manually feeding the results of each swallow() into the other, but it’s much easier to read and maintain.

let safeViewHike = compose(

swallow(ValidationError)(() => `Invalid format.`),

swallow(NotFound)(() => `No such hike.`),

)(viewHike);

This style of creating functions without first gathering and passing around all their arguments is called Point-free style, and it’s a big part of what makes functional programming so elegant.

It took us some time to arrive at this design, and many of the intermediate steps seemed a lot worse off than just using try…catch. But just like the Enforcer pattern we covered in an earlier episode, the best way to combine behaviors is through composition. Rather than cascading if-else statements, complex multiple error handling logic, or experimental catch syntax, we handled two kinds of errors through composition.

If you aren’t already in love with function composition, hang tight until the next episode: we’ll use error composition to put a functional twist on a popular Object-Oriented Programming pattern called the Null Object Pattern.

Today, look for try…catch statements in your codebase, and break down the parent function until you can replace the try…catch altogether with swallow(). And if you need to handle multiple error types, just layer them with compose().

That’s it for today. Want to keep leveling up your craft? Don’t forget to subscribe to the channel for more rapid codecasts on design patterns, refactoring and development approaches.

]]>

Do you get spooked by runtime errors? They can be a pain to deal with, but we’ll see just how much solid error handling strategies can help in our crusade against if…else statements on today’s episode of TL;DR, the JavaScript codecast series that teaches working web developers to craft exceptional software in 5 minutes a week.

Transcript

When you invoke a function, what might happen? Most of the time we get back a simple return value, but there’s another kind of result a function can produce: an Error.

An Error typically makes us think we did something wrong, but errors are just another feedback mechanism for a program, and unlike returning a value, throwing an Error has a peculiar superpower: it automatically propagates up the caller stack — interrupting the caller functions as it propagates — until it’s caught. This propagation behavior makes throw and try…catch statements a powerful control flow construct.

But handling errors correctly can quickly turn elegant functions into a hot mess of try…catch statements and nested if…else statements — exactly the sort of thing we’ve been obliterating in the last few episodes.

Today we’re working on a tiny version of the chatbot we started a couple episodes back that helps outdoor enthusiasts find great trails to hike.

let chatbot = (message) => {

return viewHike(message);

};

chatbot('view hike mirror lake');

// => 'Details about <mirror lake>'

chatbot('view hike lost lake');

// => 💥 NotFound: lost lake

chatbot('show hike blue ridge');

// => 💥 ValidationError: show hike blue ridge

We’ve cut down the chatbot code from the last couple episodes: it only understands one command, view hike, which shows details about a hike. But sometimes users ask for a hike that isn’t in the database or their syntax is a bit off. To simulate these edge cases, the viewHike() function uses a few custom error types:

class NotFound extends Error {

constructor(message) {

super(message);

this.name = 'NotFound';

}

}

class ValidationError extends Error {

constructor(message) {

super(message);

this.name = 'ValidationError';

}

}

viewHike() throws a NotFound error if the hike has the word “lost”, and a ValidationError if the format of the message is off.

let viewHike = (message) => {

let match = /^view hike (.+)$/.exec(message);

let hike = match && match[1];

return (

!hike ?

raise(new ValidationError(message))

: hike.includes('lost') ?

raise(new NotFound(hike))

:

`Details about <${hike}>`

);

};

Like return and continue, throw is a statement, so to use it in a nested ternary, we wrote a simple helper called raise().

let raise = (error) => { throw error; };

There’s a stage 2 proposal for an expression-friendly version of throw in the works, but until it lands it’s easy enough to make our own. So all told, the viewHike() function can result in one of two things: a return value, or a thrown Error.

Our chatbot is terse, but it already has some issues. We definitely don’t want the chatbot to blow up and stop running if a NotFound error is thrown, so let’s wrap the call with a try…catch statement to instead return a safe fallback message:

let chatbot = (message) => {

try {

return viewHIke(message);

} catch (error) {

return `No such hike.`;

}

};

chatbot('view hike mirror lake');

// => 'No such hike.'

chatbot('view hike lost lake');

// => 'No such hike.'

chatbot('show hike blue ridge');

// => 'No such hike.'

Wait, why is our chatbot always responding with “No such hike” now? That first command definitely worked before. Let’s comment out the try…catch statement to see what’s happening.

let chatbot = (message) => {

// try {

return viewHIke(message);

// } catch (error) {

// return `No such hike.`;

// }

};

chatbot('view hike mirror lake');

// => 💥 ReferenceError: viewHIke is not defined

chatbot('view hike lost lake');

// =>

chatbot('show hike blue ridge');

// =>

It looks like we were swallowing a ReferenceError. Well that would be a horrible bug to deploy to production!

We just made the cardinal mistake of error handling: a catch all. The try…catch statement will swallow any error — including errors we didn’t mean to catch.

It may sound obvious now, but just about any open source framework you’ve used probably has a catch-all bug in the codebase, from frontend frameworks like Ember.js to backend libraries like Passport and Jekyll. A catch-all ranks in the top 5 most frustrating bugs a library can make because it suppresses important errors unrelated to the library that the developer would otherwise see in the logs.

So it’s up to us to whitelist the type of error we want to handle, and otherwise rethrow it. Since we made custom error subclasses, we can use the instanceof operator to guarantee we’re catching an error we can handle. Otherwise, we’ll rethrow it.

let chatbot = (message) => {

try {

return viewHike(message);

} catch (error) {

if (error instanceof NotFound) {

return `No such hike.`;

} else {

throw error;

}

}

};

chatbot('view hike mirror lake');

// => 'Details about <mirror lake>'

chatbot('view hike lost lake');

// => 'No such hike.'

chatbot('show hike blue ridge');

// => 💥 ValidationError: show hike blue ridge

To rescue a ValidationError, we add another else-if case.

let chatbot = (message) => {

try {

return viewHike(message);

} catch (error) {

if (error instanceof NotFound) {

return `No such hike.`;

+ } else if (error instanceof ValidationError) {

+ return `Invalid format.`;

} else {

throw error;

}

}

};

chatbot('show hike blue ridge');

// => 'Invalid format.'

The chatbot is behaving well and not blowing up, but handling an error correctly looks awful. We definitely can’t leave these checks out, but a try…catch is a branching construct just like an if…else, so these are essentially nested, cascading if…else statements all over again. And we’ll have to repeat this boilerplate each time we need to handle an error correctly.

It really doesn’t seem like custom errors are making our code any better — in fact, it seems to be getting much worse!

That’s why you should never be too quick to sprinkle custom errors throughout your codebase. Because throw statements are fundamentally a control flow construct, they can often fight against everything we’ve been working towards in the previous episodes.

So when, if ever, should you use custom errors? Well, I prefer the alternative name “Custom Exceptions” because it tells us exactly when to use them: for unusual, exceptional cases that most of our codebase shouldn’t care about, like a NetworkError. These are cases that one or two functions in the codebase will handle with the same response: on the backend, a NotFound error thrown from any route should just generate a 404 response.

Used sparingly, custom exceptions can actually eliminate branching logic: since the rest of our functions can assume the happy path, they don’t need an if…else statement to check for an unusual return value, like a null check.

So a custom exception is worthwhile when it eliminates edge cases and if…else statements from calling functions, and throwing custom exceptions makes sense when the function would blow up anyway with a useless generic runtime error, like a TypeError.

Let’s see if we can find an error handling solution that cuts down if…else statements and common typos. Throwing an error triggers an early exit, even from a catch clause. Let’s shuffle the error checking code so it looks more like a guard clause:

let chatbot = (message) => {

try {

return viewHike(message);

} catch (error) {

if (error instanceof NotFound) {

throw error;

}

return `No such hike.`;

}

};

Now there’s nothing stopping us from extracting this entire guard clause into a function! Let’s call it rescue().

let rescue = (error, type) =>

error instanceof type

? error

: raise(error)

;

Now when using a try…catch, we just need to make sure we precede the catch code with rescue(). This behaves much better than what we started with, and it only added one line to our naive catch-all version.

let chatbot = (message) => {

try {

return viewHike(message);

} catch (error) {

rescue(error, NotFound);

return `No such hike.`;

}

};

chatbot('view hike mirror lake');

// => 'Details about <mirror lake>'

chatbot('view hike lost lake');

// => 'No such hike.'

chatbot('show hike blue ridge');

// => 💥 ValidationError: show hike blue ridge

Unfortunately, we can’t just stack invocations of rescue(), so how do we also handle a ValidationError? Hang tight and we’ll address this problem on the next episode of TL;DR. Till then, search for try…catch statements in your codebase and enforce good error handling practices with rescue().

That’s it for today. Want to keep leveling up your craft? Don’t forget to subscribe to the channel for more rapid codecasts on design patterns, refactoring and development approaches.

]]>

How can you cut down small if-else statements that recur across several functions? Let’s cover another pattern for nuking if-else statements on today’s episode of TL;DR, the JavaScript codecast series that teaches working web developers to craft exceptional software in 5 minutes a week.

Transcript

Over the past few episodes, we’ve been covering design patterns to help cut down the size and depth of if-else statements. If you’re new to this vendetta against if-else statements, hop back to the episode on nested ternaries to get up to speed.

Nested ternaries and the Router design pattern have helped us reduce the size and depth of cascading if-else statements, but we haven’t dealt with terse, non-cascading if-else statements that get copy-pasted across functions. These if-else statements often appear at the beginning of the function as a guard clause. They’re innocent and short, but like a weed they reproduce with each new feature, and the duplication is tricky to eradicate.

Today we’re continuing to work on a chatbot that helps outdoor enthusiasts find great trails to hike. This chatbot can respond to simple text commands, like list hikes, add hike and delete hike. If it doesn’t understand the command, it replies with a fallback message.

responder('list hikes');

// => 'Lost Lake, Canyon Creek Meadows'

responder('add hike Mirror Lake');

// => 'Added Mirror Lake!'

responder('delete hike Mirror Lake');

// => 'Removed Mirror Lake!'

responder('where is Mirror Lake');

// => "Sorry, I don't understand."

The code is a few steps forward from what we had last time: the responder function still follows the Router pattern, but we lifted the individual routes into functions to make the list of responses easier to read.

let hikes = [

'Lost Lake',

'Canyon Creek Meadows',

];

let listHikes = () =>

hikes.join(', ');

let addHike = ([hike]) => {

hikes.push(hike);

return `Added ${hike}!`;

};

let deleteHike = ([hike]) => {

hikes.splice(hikes.indexOf(hike), 1);

return `Removed ${hike}!`;

};

let fallback = () =>

`Sorry, I don't understand.`;

let responses = [

{ command: /^list hikes$/,

response: listHikes },

{ command: /^add hike (.+)$/,

response: addHike },

{ command: /^delete hike (.+)$/,

response: deleteHike },

{ command: /^(.*)$/,

response: fallback },

];

let responder = (message) => {

let { command, response } = responses

.find(({ command, response }) =>

command.test(message)

);

return response(

command.exec(message).slice(1)

);

};

The responder function searches through the list of responses for a command that matches the chat message, then invokes the corresponding response function.

let responder = (message) => {

let { command, response } = responses

.find(({ command, response }) =>

command.test(message)

);

return response(

command.exec(message).slice(1)

);

};

Today, we want to enforce that the add hike and delete hike commands are executed with the word “sudo” to prevent any accidental changes. Only some commands need sudo, and if the user forgets sudo, we want to provide feedback. So we can’t just add the word “sudo” directly to the regular expressions.

responder('list hikes');

// => 'Lost Lake, Canyon Creek Meadows'

responder('sudo add hike Mirror Lake');

// => "Sorry, I don't understand."

responder('sudo delete hike Mirror Lake');

// => "Sorry, I don't understand."

responder('where is Mirror Lake');

// => "Sorry, I don't understand."

We can make the regular expressions a little more lenient so the command is at least recognized:

let responses = [

- { command: /^list hikes$/,

+ { command: /list hikes$/,

...

- { command: /^add hike (.+)$/,

+ { command: /add hike (.+)$/,

...

- { command: /^delete hike (.+)$/,

+ { command: /delete hike (.+)$/,

...

];

But how should we enforce the use of sudo for these admin commands?

One tempting way to support a new, shared behavior like this is to add a new property to each response object: we’ll call it adminOnly.

let responses = [

...

{ command: /add hike (.+)$/,

+ adminOnly: true,

response: addHike },

{ command: /delete hike (.+)$/,

+ adminOnly: true,

response: deleteHike },

...

];

Then in the responder, we’ll add a guard clause that checks if the route requires “sudo”, and if the word is missing, we’ll respond with “Not allowed.”

let responder = (message) => {

- let { command, response } = responses

+ let { command, adminOnly, response } = responses

.find(({ command, response }) =>

command.test(message)

);

+ if (adminOnly && !message.startsWith('sudo')) {

+ return 'Not allowed!';

+ }

return response(

command.exec(message).slice(1)

);

};

When faced with this kind of feature request — that is, supporting a new behavior that can be generalized for related functions — many developers would probably do what we did and insert that behavior logic into the responder function. It’s quick, keeps the code DRY, and it just feels nice. But it’s also a premature abstraction that conflates responsibilities: the responder function has become responsible for routing and authorization logic.

Every time a feature requires a new qualifier, the responder will be edited. It won’t be long before there are several short if-else statements in the responder — which is precisely what the Router pattern was intended to help us demolish.

From a testing perspective, we can’t unit test the authorization logic for individual chat commands without going through the responder. We can only write integration tests for authorization.

Whenever you’re tempted to alter terse, single responsibility functions to incorporate a new behavior, take a step back and identify the most naive solution that still satisfies the single responsibility principle.

For example, what if we added this admin enforcement logic directly to the addHike() and deleteHike() response functions instead of the responder?

Let’s undo our changes. For the response functions to determine if sudo was used, we need to pass the full chat message:

let responder = (message) => {

...

return response(

- command.exec(message).slice(1)

+ { message,

+ match: command.exec(message).slice(1) }

);

};

In addHike(), we can add a guard clause that checks if the message starts with “sudo” and returns “Not allowed” if it doesn’t. We can copy-paste this guard clause to deleteHike().

let addHike = ({ match: [hike], message }) => {

if (!message.startsWith('sudo')) {

return 'Not allowed!';

}

hikes.push(hike);

return `Added ${hike}!`;

};

let deleteHike = ({ match: [hike], message }) => {

if (!message.startsWith('sudo')) {

return 'Not allowed!';

}

hikes.splice(hikes.indexOf(hike), 1);

return `Removed ${hike}!`;

};

This naive solution is feature complete and leaves the responder function focused on one responsibility. But now one if-else statement has multiplied into two in our response functions. So how are we any better off? Well, by letting the naive solution play out, we’re equipped to build an abstraction that solves a concrete problem: the duplicated guard clause.

This guard clause represents a behavior, which we could call adminOnly. When you hear the word “behavior” or “trait”, we’re referring to a cross-cutting concern that can be shared across several functions, even if they do completely different things. The addHike() and deleteHike() response functions have different jobs, but they share a similar behavior.

A great way to share behavior in a language that supports functional programming is through function composition.

Suppose we had a function, called adminOnly(), that receives an unprotected function like addHike(), and returns a new version of addHike() that enforces the use of the “sudo” keyword:

let responses = [

...

{ command: /add hike (.+)$/,

- response: addHike },

+ response: adminOnly(addHike) },

{ command: /delete hike (.+)$/,

- response: deleteHike },

+ response: adminOnly(deleteHike) },

...

];

adminOnly() is easy to code up once you get the parameter signature right. If the message contains the word “sudo”, it invokes the route it received as an argument. Otherwise, it returns the failure message.

let adminOnly = (route) => (request) =>

request.message.split(' ').includes('sudo')

? route(request)

: 'Not allowed!'

;

I like to call this kind of behavior function an Enforcer: it’s a Higher-Order Function with a guard clause that enforces some authorization rule, like requiring the word “sudo” or checking if the current user is an admin.

The add hike and delete hike commands behave exactly as they did in our first solution. But this time, we didn’t have to edit existing functions to support the new behavior: we only added new functions and composed them. It’s as though we’re writing immutable code, and like immutable data structures, this style of coding has great design benefits and prevents regressions. None of our existing unit tests will change, and the new code already follows the single responsibility principle.

We can even add new enforcement behaviors.

Suppose we want to enforce that the list hikes command include the word “please” with a new behavior called askNicely(). All we need to do is duplicate the adminOnly() behavior, then change the keyword and failure message:

let askNicely = (route) => (request) =>

request.message.split(' ').includes('please')

? route(request)

: 'You should ask nicely.'

;

let responses = [

{ command: /list hikes$/,

response: askNicely(listHikes) },

...

];

And because these enforcers are built through function composition, they layer without additional work. To make the delete hike command require “sudo” and “please”, we just compose the behaviors.

let responses = [

...

{ command: /delete hike (.+)$/,

- response: adminOnly(deleteHike) },

+ response: adminOnly(askNicely(deleteHike)) },

...

];

But what about the duplication between these behaviors? Other than a different keyword and failure message, they look exactly the same. We can DRY them up into an enforcer factory called requireKeyword() that returns a new behavior based on a customizable keyword and failure message.

let requireKeyword = (word, fail) => (route) => (request) =>

request.message.split(' ').includes(word)

? route(request)

: fail

;

Now the adminOnly() and askNicely() behaviors can be replaced with partial invocations of the requireKeyword() enforcer factory!

let adminOnly = requireKeyword('sudo', 'Not allowed!');

let askNicely = requireKeyword('please', 'You should ask nicely.');

We’ve landed on a solution that satisfies the single responsibility principle, didn’t change existing functions, and produces descriptive code.

responder('list hikes');

// => 'You should ask nicely.'

responder('please list hikes');

// => 'Lost Lake, Canyon Creek Meadows'

responder('add hike Mirror Lake');

// => 'Not allowed!'

responder('sudo add hike Mirror Lake');

// => 'Added Mirror Lake!'

responder('sudo please delete hike Mirror Lake');

// => 'Removed Mirror Lake!'

The enforcer pattern pops up in other places, like guarding authenticated pages in a React web app:

let requireLogin = (Component) => (props) =>

props.currentUser

? <Component {...props} />

: <Redirect to="/login" />

let ActivityPage = ({ notifications }) =>

<section>

<h2>Recent Activity</h2>

<Notifications notifications={notifications} />

</section>

export default requireLogin(ActivityPage);

Or rendering a loading indicator while an API request finishes:

let withLoader = (msg) => (Component) => (props) =>

props.loading

? <LoadingIndicator message={message} />

: <Component {...props} />

let ProfileScreen = ({ stories, user }) =>

<div>

<h2>Stories from {user.name}</h2>

<StoryList stories={stories} />

</div>

export default withLoader('Wait…')(ProfileScreen);

Or protecting backend routes based on the current user:

let listAllUsers = (req, res) => {

res.send(users);

};

let adminOnly = (req, res, next) =>

req.user && req.user.isAdmin

? next()

: res.sendStatus(401);

app.get(

adminOnly,

listAllUsers,

);

But we wouldn’t have discovered this pattern without writing the naive copy-paste solution first and letting the repetition guide the refactor.

So don’t try to prevent copy-paste prematurely: instead, let the code be duplicated, then DRY up the duplication through function composition. The naive copy-paste solution will lead you to a resilient abstraction that won’t be outgrown by the next feature.

Today, look for short, repeated if-else statements near the beginning of the function that guard the rest of the function, and try extracting them into an enforcer function.

That’s it for today. Want to keep leveling up your craft? Don’t forget to subscribe to the channel for more rapid codecasts on design patterns, refactoring and development approaches.

]]>

This post originally appeared as a guest blog on Course Report.

Ah, books—the time-tested technique for ingesting knowledge. Programming literature may not be as engaging as Codecademy or CodeCombat, but it will help reinforce concepts and provide perspectives you’d be hard pressed to find in an online course.

Here are five books you should read as you begin your journey as a web developer. Keep in mind that these books won’t teach you to code, so they’re not substitutes for an online course or a coding bootcamp—but they are excellent supplements!

1. JavaScript Allongé

Thanks to frameworks like React and Elm, Functional Programming (FP) has made a huge resurgence in the development community. Yet very few developers understand the basics of Functional Programming beyond the .map() and .forEach() methods.

JavaScript is foremost a functional language, so you can stand out from the crowd of junior developers and improve your problem solving skills with an FP primer. The free JavaScript Allongé eBook is a fun and elegant way to learn the essential concepts of Functional Programming from a JavaScript perspective. You’ll be a shoe-in for React and Elm projects.

2. Grokking Algorithms

More individuals than ever are joining the developer workforce from a background outside of Computer Science. While you don’t need a CS degree to be a great developer, a grounding in Computer Science can foster a love of clever problem solving and design patterns to help you write smarter code.

It’s unlikely you’ll learn CS basics in a coding bootcamp, but you don’t need to sacrifice four years of your life and $150k on a degree!

Grokking Algorithms is a delightfully unpretentious introduction to hallmark algorithms in Computer Science. The easy-to-follow explanations and colorful illustrations have made it a favorite with my students and mentees.

This is a book you shouldn’t just read. Instead, I recommend scribbling on a whiteboard and pulling up a text editor to implement the algorithm as you read. Better yet, you can code it up with a Test Driven Development (TDD) approach: write tests first, then code up the solution to make the tests pass! Here’s an excellent introduction to TDD from the folks at dwyl.

Grokking Algorithms is surprisingly comprehensive, but if you find algorithms as fun as my mentees have, you’ll quickly run out. Vaidehi Joshi’s basecs project features illustrated guides to a growing list of algorithms, and was recently turned into a podcast.

3. JavaScript: The Good Parts

Stack Overflow has unintentionally become the go-to source for copy-pasting bad example code from JavaScript’s darker recesses. For a new web developer, it can be hard to determine which parts of JavaScript are good to use.

Douglas Crockford is one of the early titans in JavaScript development. His essential guide, JavaScript: The Good Parts, is the traditional book that “every JavaScript developer must read,” especially impressionable newcomers to the web community. As the title suggests, this book is not exhaustive—Crockford focuses only on the good parts of JavaScript, leaving older JavaScript features to rot in cobwebby corners.

4. You Don’t Know JS

On the other side of the spectrum, You Don’t Know JS is a free and fairly comprehensive book series for learning modern JavaScript in its entirety. It’s still not a replacement for a dedicated coding bootcamp, but of all the reads, it requires the least prior experience in web programming.

For hardcore bookworms, Kyle Simpson also sells a hard copy.

5. Mozilla Developer Network Tutorials

These books focus on core JavaScript and algorithms, but don’t specifically address programming in the browser. Mozilla Developer Network (MDN) has amassed an exceptional collection of guides for programming in the browser. Though not strictly a book, the guides are rich with examples and links to references for the many APIs built into web browsers. The MDN guides make a fabulous supplement to any web course or bootcamp!

And of course, MDN is the authoritative source for reference documentation on any browser web API.

Honorable Mention: Eloquent JavaScript

The third edition of Eloquent JavaScript is out, and I’ve heard nothing but praise from my students. And the online version is free! I haven’t yet read through it, so I can’t recommend it firsthand. But at a glance, I’m impressed by Marijn Haverbeke’s elegant teaching style for some fairly complex topics.

6. Books by Dead People

Reading is one of the best ways to cultivate your brain powers, so why stop with programming literature? We are continuously bombarded with the news and opinions of our time, but classic literature provides a chance to step back and glean wisdom from our collective past.

So read books by dead people! Here are some of my favorite classics:

- A Tale of Two Cities by Charles Dickens

- War and Peace by Leo Tolstoy

- Sense and Sensibility by Jane Austen

- Lord of the Rings by J. R. R. Tolkein

- Agatha Christie’s mysteries featuring Hercule Poirot

Pick good literature to fill your brain attic with. If the last time you read was in high school, delight yourself with just how many subtleties you missed in your English classes. You just might watch Netflix (a little) less thanks to your newly-developed craving for reading.

P.S. I wrote a book too! If you want to learn functional design patterns for Node.js backends, you can read a sample of my book, Functional Design Patterns for Express.js.

You can also check out TL;DR, the JavaScript codecast series that teaches working web developers to craft exceptional software in 5 minutes a week. We cover design patterns, refactoring and development approaches at the speed of vim!

How do you tame a group of if-else or switch statements that grows with every feature request? Let’s continue obliterating if-else statements on today’s episode of TL;DR, the JavaScript codecast series that teaches working web developers to craft exceptional software in 5 minutes a week.

Transcript

Last episode we covered nested ternaries. Nested ternaries are a great replacement for if-else statements when we need the power of a conditional, but can afford to replace statements with expressions. But sometimes the number of cases just gets longer and longer.

Even if you extracted each case to a separate function, the function wrapping around the if-else or switch statement will continue to grow unbounded.

Especially in codebases that change hands often, this promotes a sloppy, inconsistent boundary between the individual cases and the mapping logic that decides which case to run.

Today we’re refactoring some code for a chatbot that helps outdoor enthusiasts find great trails to hike.

console.log( responder('list hikes') );

// => Lost Lake

// => Canyon Creek Meadows

console.log( responder('recommend hike') );

// => I recommend Mirror Lake.

console.log( responder('add hike Mirror Lake') );

// => Added Mirror Lake!

console.log( responder('where is Mirror Lake') );

// => Sorry, I don’t understand that.

So far this chatbot can respond to a few basic commands, like “list hikes”, “recommend hike”, and “add hike”. If you ask the chatbot something it doesn’t understand — like “where is Mirror Lake” — it responds with a fallback message.

At the moment, all of this logic lives in the responder function. Our chatbot currently has 4 behaviors, so there are 3 if-else cases and one fallback return statement.

let hikes = [

'Lost Lake',

'Canyon Creek Meadows',

];

let randomHike = () =>

hikes[Math.floor(Math.random() * hikes.length)];

let responder = (message) => {

if (message === 'list hikes') {

return hikes.join('\n');

} else if (message === 'recommend hike') {

return `I recommend ${randomHike()}`;

} else if (message.startsWith('add hike')) {

let hike = message.slice(9);

hikes.push(hike);

return `Added ${hike}!`;

}

return "Sorry, I don't understand that.";

};

This code is short right now, but that’s because our chatbot only supports 3 commands so far. It will need to understand many more commands, and each new command will add another if-else case.

Ballooning if-else or switch statements are a code smell that suggest the responder function might have too many responsibilities.

So how could we eliminate these cascading if-else statements before they grow to hundreds of cases?

Enter the Router. The Router is a design pattern that helps us turn a giant if-else or switch statement inside out by decoupling the responsibility of routing logic from the business logic of the individual cases.

The Router pattern is particularly nice because we can follow a step-by-step procedure to refactor the code, and at each step the code should still run.

The first step is to extract each case into a separate function and list them in a data structure, like a plain ol’ JavaScript object. Let’s move the code for the 3 chatbot commands into an object called “responses”, using the command as the key.

let responses = {

'list hikes': () =>

hikes.join('\n'),

'recommend hike': () =>

`I recommend ${randomHike()}`,

'add hike': (message) => {

let hike = message.slice(9);

hikes.push(hike);

return `Added ${hike}!`;

},

};

Now that we’ve moved each command into responses, we can replace the cases by looking up the appropriate response function and invoking it. At this point, our code should still work exactly as before.

let responder = (message) => {

if (message === 'list hikes') {

return responses['list hikes']();

} else if (message === 'recommend hike') {

return responses['recommend hike']();

} else if (message.startsWith('add hike')) {

return responses['add hike'](message);

}

return "Sorry, I don't understand that.";

};

We’ve finished the first step — it’s usually pretty mechanical, but it often spawns other refactors as you discover subtle side effects and hidden dependencies that need to be passed as an argument. For example, we quickly realized that the “add hike” command needs the rest of the chat message so it can extract the name of the hike.

Now for step 2: let’s collapse the cascading if-else statements. Since each response is listed with its corresponding command in the responses object, we can use the message to directly look up the appropriate response function.

let responder = (message) => {

let response = responses[message];

if (response) {

return response(message);

}

return "Sorry, I don't understand that.";

};

If a matching response function is found, we’ll invoke it. Also, since one of our chatbot commands needs the message, we’ll pass it as an argument. You’ll need to find a parameter signature that works for any of your response functions, so this may take some additional refactoring. But it’s okay if a response function ignores those arguments, as the “list hikes” and “recommend hike” commands do.

Nice, we collapsed a 3 case if-else statement into one! In step 3 we’ll eliminate the if-else statement altogether by extracting the fallback behavior into a function of its own. If no response function matched, we’ll use the double pipe operator to insert the fallback response. Now that we know the response variable will always contain a function, we can invoke it unconditionally.

let fallback = () =>

"Sorry, I don't understand that.";

let responder = (message) => {

let response = responses[message] || fallback;

return response(message);

};

And that’s it! The Router pattern helped us turn an if-else statement with several cases inside out. And now the responder function, which was destined to grow without bound, is a simple shell that just dispatches the message to the appropriate response function. In backend terminology, we call the responder function a “router,” and the commands are called “routes.”

Unfortunately, we broke the “add hike” command that expects the message to include the name of the hike after the command, so our simple property lookup isn’t flexible enough.

To fix this, we’ll convert responses to a list and use the find Array method to see which command the message starts with.

let responder = (message) => {

let [command, response] = Object.entries(responses)

.find(([command, response]) =>

message.startsWith(command)

);

return response(message);

};

Now that we’ve switched to startsWith, we can move the fallback code to the responses object, and use an empty string as the key! We just need to make sure it comes last. Now we’ve eliminated conditionals from the responder function entirely!

let responses = {

'list hikes': ... ,

'recommend hike': ... ,

'add hike': ... ,

'': () =>

"Sorry, I don't understand that."

};

See how control flow got replaced by a data structure? That’s a recurring theme in software design: many problems that are traditionally solved with algorithmic code can be described much more elegantly with a data structure, which is easier to debug, extend and reason about.

In the Router pattern, the mapping data structure doesn’t even have to be an object. We could turn the responses object into an array of objects, with one object per command!

let responses = [

{

command: 'list hikes',

response: () => hikes.join('\n')

},

{

command: 'recommend hike',

response: () => `I recommend ${randomHike()}`

},

{

command: 'add hike',

response: (message) => {

let hike = message.slice(9);

hikes.push(hike);

return `Added ${hike}!`;

}

},

{

command: '',

response: () =>

"Sorry, I don't understand that."

}

];

This format gives us flexibility: to define more complex commands, we can easily switch from strings to regular expressions, and even define capture groups for the response function to receive as an argument!

let responses = [

{

command: /^list hikes$/,

response: () => hikes.join('\n')

},

{

command: /^recommend hike$/,

response: () => `I recommend ${randomHike()}`

},

{

command: /^add hike (.+)$/,

response: ([hike]) => {

hikes.push(hike);

return `Added ${hike}!`;

}

},

{

command: /^(.*)$/,

response: ([message]) =>

`Sorry, I don't understand "${message}".`

}

];

let responder = (message) => {

let { command, response } = responses

.find(({ command, response }) =>

command.test(message)

);

return response(

command.exec(message).slice(1)

);

};

Not only did that simplify the code for “add hike”, but it provides new team members with a template for adding new commands. It’s pretty straightforward to add “where is” by using “add hike” as a starting point.

let responses = [

...

{

command: /^where is (.+)$/,

response: ([hike]) =>

`${hike}? Do I look like a GPS receiver?`

},

...

];

The Router pattern helps us discover common needs across if-else cases and provide a flexible interface to DRY them up. Because the routing logic is backed by a data structure, we can do things that were previously impossible with hard-wired if-else or switch statements, like dynamically enabling particular commands at runtime.

And with each case extracted into a function, we can unit test each response without going through the routing logic first!

The Router pattern helps solve the same problems in Functional Programming that polymorphism does in Object Oriented Programming. And it pops up everywhere: in React you might use this pattern to select which component to render, on the backend you could decide which handler function to invoke for a webhook, in a Redux reducer you can delegate state updates to smaller reducers, and of course on the backend you can define routes for a particular URL.

Today, scan through your codebase for switch and if-else statements that tend to grow with each feature request, and use the Router pattern to turn it inside out.

That’s it for today. Want to keep leveling up your craft? Don’t forget to subscribe to the channel for more rapid codecasts on design patterns, refactoring and development approaches.

]]>

How many times does “else if” appear in your codebase? Let’s examine one way you can cut down on if-else statements in today’s episode of TL;DR, the JavaScript codecast series that teaches working web developers to craft exceptional software in 5 minutes a week.

Transcript

The if-else statement is probably the first control flow construct you learned as a programmer. And yet few things are as terrifying as diving into a legacy codebase swimming in nested, cascading if-else statements.

Code with fewer if-else statements is generally less complex because it has fewer edge cases that need to be tested, and code with fewer statements tends to have a more predictable program flow. A program without any if-else statements or other conditional constructs is incredibly straightforward to reason about, because data will always flow through the program in the same way, even when the inputs and output change.

Now it’s unlikely you could eliminate all if-else statements from a program without making readability worse off. But a lot of if-else statements is a code smell, because they unnecessarily increase the complexity and surface area for bugs.

So in the next few episodes of TL;DR we’ll cover some design patterns to cut down on if-else statements.

Today we’re examining some recursive code with several cascading if-else statements.

resolve({

user: {

firstName: 'Jonathan',

lastName: 'Martin',

favoritePlaces: () => [

'Alps',

'PNW'

]

}

});

/*

* Spits out:

*/

{

user: {

firstName: 'Jonathan',

lastName: 'Martin',

favoritePlaces: [

'Alps',

'PNW'

]

}

};

This resolve function walks through an object with nested objects, arrays and functions. Given a deeply nested structure like this, it returns a similar structure, but where the functions — like this one for the property favoritePlaces, which was originally a function — have been invoked and replaced with their return value.

Now the logic for the resolve function is pretty terse: if the current structure is an array or object, it recurses over the children. If it’s a function, it invokes it and recurses over the return value. Otherwise, it will just return the structure as-is.

let resolve = (node) => {

if (isFunction(node)) {

return resolve(node());

} else if (isArray(node)) {

return node.map(resolve);

} else if (isObject(node)) {

return mapValues(node, resolve);

}

return node;

};

Now these if-else statements aren’t complex per se, in fact it almost looks like it could be a switch statement instead. The problem is the testing conditions — that is, whether the data is a function, array or object — can’t be described with strict equality, which we would need to use a switch statement. Hence, we had to stick with if-else statements instead.

So if the test condition is too complex for a switch statement, is there an alternative that might at least move us away from if-else statements?

Well, the ternary operator is essentially an if-else expression. While an if-else statement runs statements but doesn’t return anything, a ternary expression evaluates and returns the value of one of the two expressions. Let’s write a new version of the resolve function and convert the first if-else case to a ternary: if the node is a function, the ternary evaluates to this case on the left, but otherwise it will evaluate to the case on the right, that is, the node. Like an if-else statement, only code in the matching case is evaluated — the other is completely ignored.

Because JavaScript developers don’t often see ternaries used in production codebases, there is a stigma that ternaries are brittle and have finicky syntax rules. But ternaries are actually more robust than an equivalent if-else statement because you can only embed expressions, and not statements. That makes it harder to sneak a side effect in, like setting a variable or forcing an early return.

The main frustration for many developers is reading another developer’s one-liner ternary, so it’s essential to space them out just like you would an if-else statement.

let resolve = (node) => {

return isFunction(node)

? resolve(node())

: node;

};

So instead of putting all this on one line, you should indent each case like this. You’ll find this convention popular in the React community for switching between components. With a little practice, a ternary becomes easier to read than the equivalent if-else statement.

But what about those cascading else-ifs we had before? Well since ternaries are just expressions, we can nest else-ifs in the last part of the ternary!

let resolve = (node) => {

return isFunction(node)

? resolve(node())

: (isArray(node) ? node.map(resolve) : node);

};

Well this is pretty awful to read, let’s fix that with some indentation. Ternaries are designed to cascade, so the parentheses are actually unnecessary. Next, let’s insert a new line after the question marks instead of before. Then unindent each line that starts with a colon so it lines up with the first line.

And for the final else case, the colon will be on a line by itself.

let resolve = (node) => {

return isFunction(node) ?

resolve(node())

: isArray(node) ?

node.map(resolve)

:

node;

};

Let’s practice reading this: if the node is a function, it returns the result of this line, otherwise if node is an array, it returns the result of this line, and finally if the node is neither a function nor an array, node is returned.

Wait a minute, we forgot to add a case for when the node is an object! Well to add it, we can just insert it before the final else.

let resolve = (node) => {

return isFunction(node) ?

resolve(node())

: isArray(node) ?

node.map(resolve)

: isObject(node) ?

mapValues(node, resolve)

:

node;

};

By formatting our ternaries like this, we can easily add and rearrange cases without changing other lines or fretting about nested parentheses!

And now that resolve is only one statement, we can drop the curlies and return keyword to make resolve an implicit returning arrow function. In this style, I like to unindent the testing conditions one more level. Now all of the test cases line up in one column, and all of the possible return values line up in another.

let resolve = (node) =>

isFunction(node) ?

resolve(node())

: isArray(node) ?

node.map(resolve)

: isObject(node) ?

mapValues(node, resolve)

:

node;

From a control flow perspective, we’ve achieved the holy grail: the resolve function has no variables, no early returns and no statements.

Now you might feel that this exercise of switching from if-else statements to ternary expressions was purely aesthetic, but syntax is just a nice side benefit of the real benefits:

Whereas if-else statements are popular in imperative programming, which is built on control flow, ternary expressions help us think about data flow and produce more declarative code. Functions with a lot of statements tend to have several entry and exit points that new team members need to parse through to keep from introducing a bug. But functions composed of expressions tend to flow in the same way for any inputs.

Today, look through your codebase for cascading if-else statements where each case is roughly the same, like returning a value or setting a variable, and try swapping the if-else for nested ternaries. And in the future, I would encourage you to default to nested ternaries, and make if-else statements the exception. You’ll find they force you to design your code better to begin with.

That’s it for today. Want to keep leveling up your craft? Don’t forget to subscribe to the channel for more rapid codecasts on design patterns, refactoring and development approaches.

]]>This post first appeared on the Big Nerd Ranch blog.

Sooner or later, your React web app will probably accept file uploads—perhaps to change out a user’s avatar or share images on a social site.

In modern browsers, the story for working with binary data is downright impressive thanks to objects like File, Blob and ArrayBuffer. You can even store large complex binary data directly in the browser with IndexedDB!

But working with binary data in a sandboxed tab is different from how a backend or native desktop app handles it. If you read in a 5MB image to a String, you will probably crash the tab. Read in 10 images simultaneously and you may crash the browser!

Luckily, JavaScript exposes natively implemented APIs to handle chunks of binary data. With some creativity, you can have the user’s browser pull its own weight, like resizing images on the front-end before upload. But before you create your own React-powered Hipstergram, it’s important to understand the performance implications of binary data in a web app.

Recap: File Objects and Blobs

The browser can’t directly access the file system for security reasons, but users can drop files into the browser with drag-and-drop.

Here’s a barebones React component that accepts a file, like an image:

let Drop = () =>

<div onDragOver={e => e.preventDefault()}

onDrop={e => {

e.preventDefault()

let file = e.dataTransfer.files[0]

console.log(file)

} }

>

...

</div>

Once the user drags-and-drops an image onto this <Drop> component, they probably expect to see a thumbnail-sized preview in the browser. The browser provides access to read in the file contents in a few formats like a String or ArrayBuffer, but each image could be 5 MB; drop 10 in the browser and you have 50 MB strings in memory!

So instead of directly returning a String or ArrayBuffer, the browser returns a Blob object. A Blob is essentially a pointer to a data source—it could point to a file on disk, an ArrayBuffer, streaming data, etc. Specifically, the e.dataTransfer.files array holds one or more File objects, which are Blobs with some extra metadata. File objects come with a few more properties, like the source file’s name.

To display the image in the DOM, e.g. with an <img /> tag, you can ask the browser for an ephemeral URL to the Blob object. This URL will only be valid while the tab is open:

...

let file = e.dataTransfer.files[0]

let url = URL.createObjectURL(file)

console.log(url)

// => "blob:http://localhost:3000/266c0711-76dd-4a24-af1f-46a8014204ff"

You can use a blob: URL wherever you would put any other URL—like http://localhost:3000/images/logo.png—and it just works!

The Trouble with “Just Rerender”

How do you use blob: URLs in React? Here’s a simple React app that accepts a dropped image and renders it on screen:

class App extends Component {

state = { file: null }

onDrag = event => {

event.preventDefault()

}

onDrop = event => {

event.preventDefault()

let file = event.dataTransfer.files[0]

this.setState({ file })

}

render() {

let { file } = this.state

let url = file && URL.createObjectURL(file)

return (

<div onDragOver={this.onDrag} onDrop={this.onDrop}>

<p>Drop an image!</p>

<img src={url} />

</div>

)

}

}

The App component starts without any file; when an image file is dropped onto the <div> element, it updates the state and rerenders with a Blob URL. Easy peasy!

But what happens if this component’s props or state changes? Let’s add a counter that changes 10 times a second:

class App extends Component {

- state = { file: null }

+ state = { file: null, counter: 0 }

+ refresh = () => {

+ this.setState(({ counter }) => ({ counter: counter + 1 }))

+ }

+ componentDidMount() {

+ this.timer = setInterval(this.refresh, 100)

+ }

+ componentWillUnmount() {

+ clearInterval(this.timer)

+ }

onDrag = event => {

event.preventDefault()

}

onDrop = event => {

event.preventDefault()

let file = event.dataTransfer.files[0]

this.setState({ file })

}

render() {

let { file } = this.state

let url = file && URL.createObjectURL(file)

return (

<div onDragOver={this.onDrag} onDrop={this.onDrop}>

<p>Drop an image!</p>

<img src={url} />

</div>

)

}

}

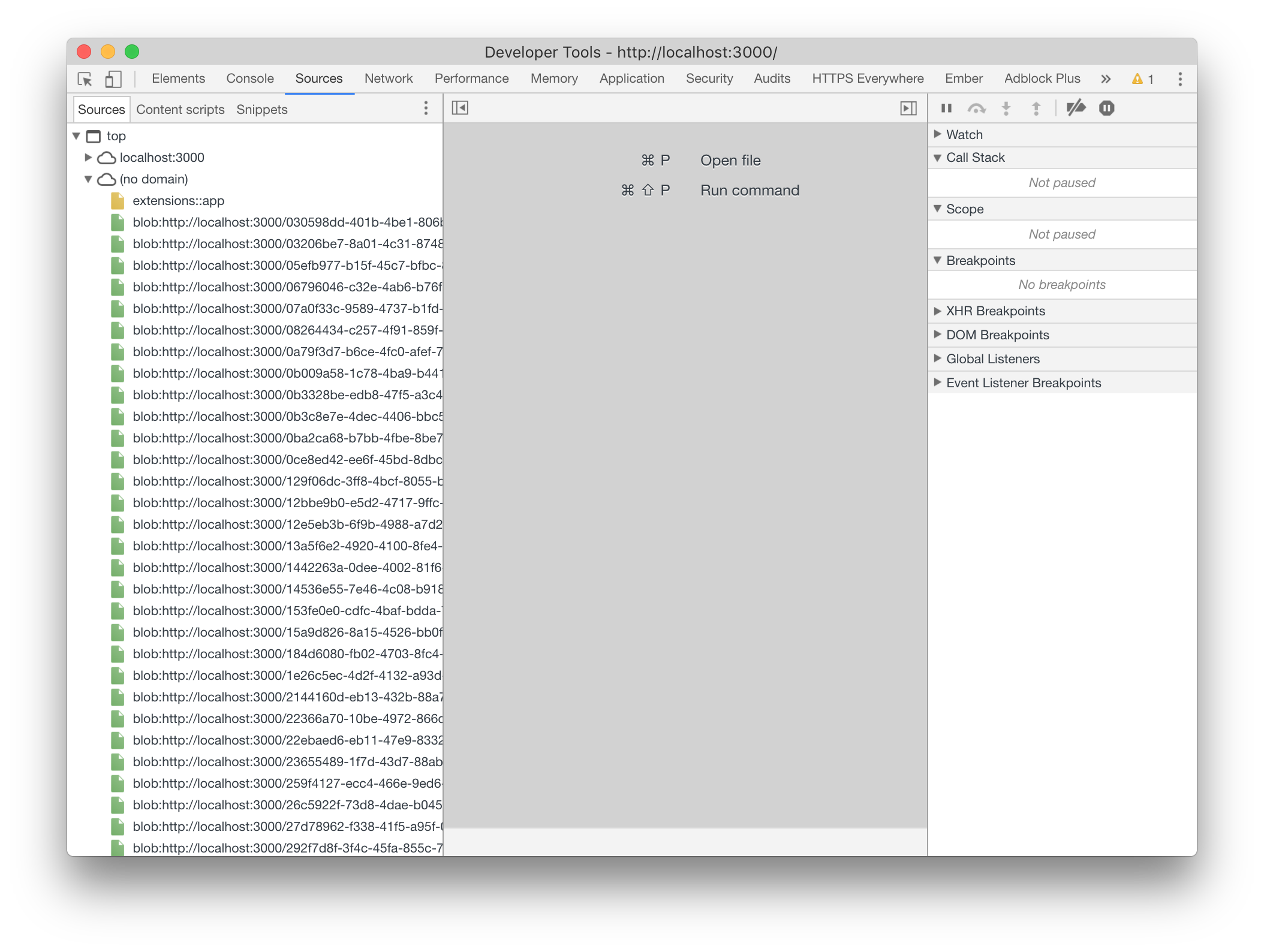

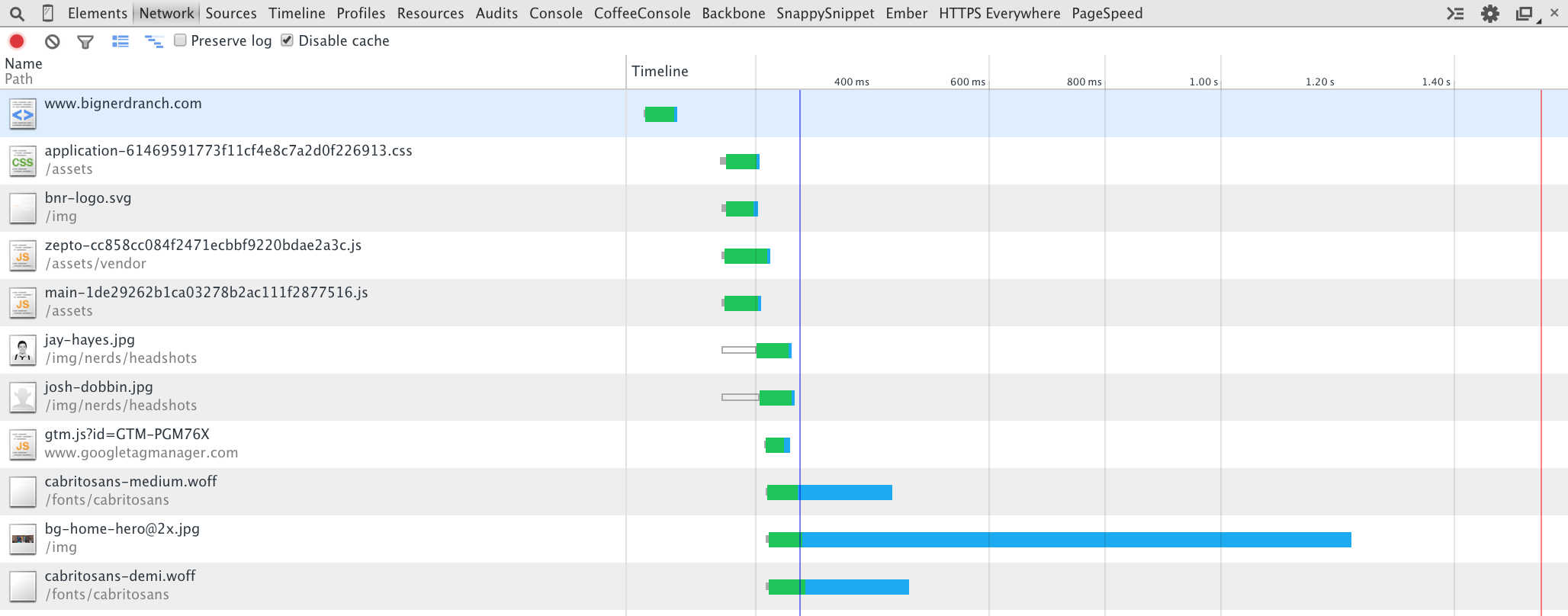

This forces React to rerender the <App> component 10 times a second. That’s fine since React is designed to handle this well, but there’s a problem: the blob: URL changes on every rerender! We can confirm this from the Sources panel in Chrome:

It seems the inline call to URL.createObjectURL() creates tons of extra blob: URLs that never get cleaned up: we’re leaking memory! Changing the URL every single rerender also causes the DOM to change, so sometimes the image will flicker since the browser’s caching mechanism doesn’t know the old and new blob: URLs point to the same image.

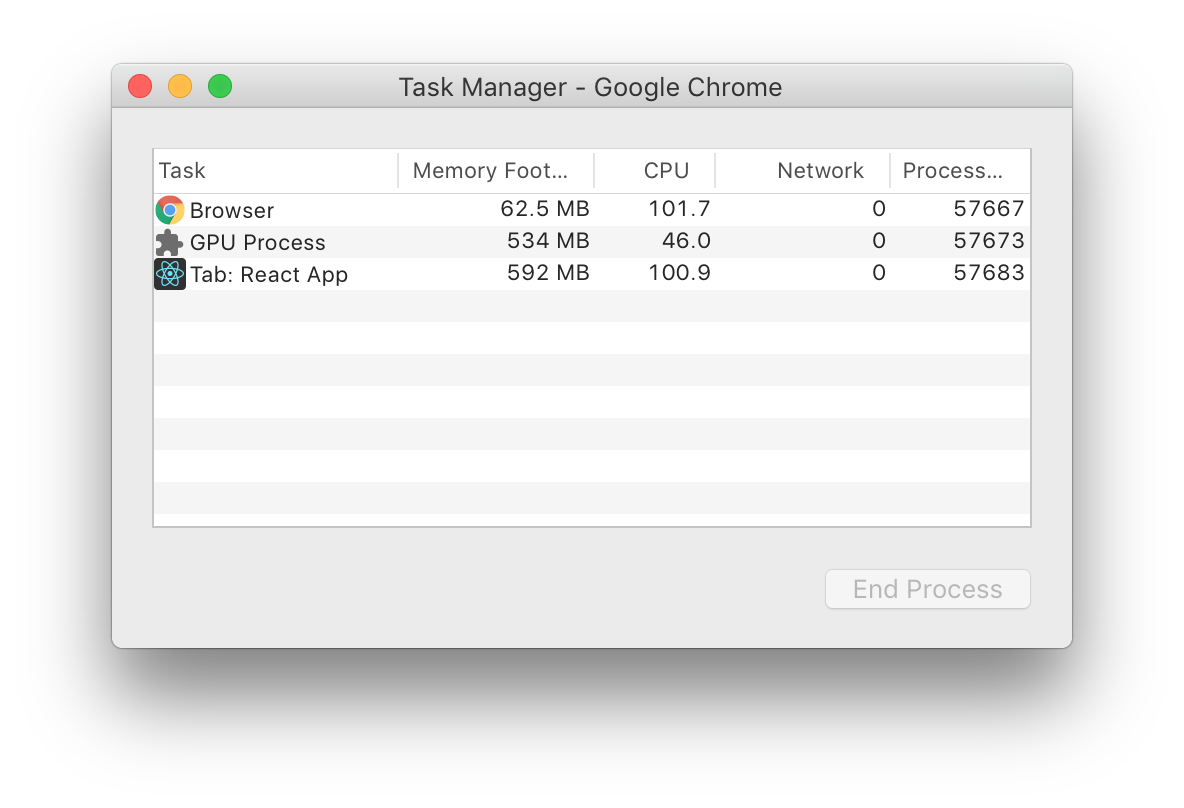

At a rerender rate of just 10 times a second, CPU usage explodes to an entire core and bloats memory usage. Eventually garbage collection will catch up, but at the cost of even more CPU usage.

Solution #1: Memoize in Class Component

For our trivial example, we can introduce an easy fix: just create the Blob URL once and store it in the <App> component’s state:

class App extends Component {

- state = { file: null, counter: 0 }

+ state = { url: '', counter: 0 }

...

onDrop = event => {

event.preventDefault()

let file = event.dataTransfer.files[0]

- this.setState({ file })

+ this.setState({ url: URL.createObjectURL(file) })

}

render() {

- let { file } = this.state

- let url = file && URL.createObjectURL(file)

+ let { url } = this.state

return (

...

)

}

}

That totally works, but only if you plan to do nothing else with the data. After the file is dropped, you will likely need to pass the original Blob object around to other React components, perhaps to store it in IndexedDB or upload it with FormData.

Solution #2: It’s Just an Object, Add a Property!

What if we just passed around the immutable Blob object, but added a url property to it with the memoized Blob URL?

class App extends Component {

...

render() {

let { file } = this.state

- let url = file && URL.createObjectURL(file)

+ let url = file && blobUrl(file)

return (

...

)

}

}

let blobUrl = blob => {

if (!blob.url) {

blob.url = URL.createObjectURL(blob)

}

return blob.url

}

That one change brings down CPU usage to near zero! But… we violated a design principle by modifying an object—the Blob object—from an API that we don’t own.

Solution #3: Global Variable

What if we passed around the Blob object, but instead of modifying it, we stored the generated Blob URL in a big lookup table that only the blobUrl() function can access?

Sounds like a global variable, right?

let hash = file => `${file.name}:${file.type}:${file.size}`

let urls = {}

let blobUrl = blob => {

let key = hash(blob)

if (!urls[key]) {

urls[key] = URL.createObjectURL(blob)

}

return urls[key]

}

It’s a great idea, but difficult to execute because the keys in a Plain Ol’ JavaScript Object must be strings, so we can only make a best effort at creating a collision-resistant key per Blob object.

While this will likely work for File objects, it won’t do for Blob objects: they don't have a .name property, so the likelihood of a key collision would be much higher.

The only real way to create a unique hash per Blob object is to tag each Blob object with a unique ID, but then we’re back to modifying the Blob object. However, we’re on the right track.

Solution #4: ES2015 Maps

We need a map type that accepts objects as keys. The POJO won’t do that, but the Map datatype introduced in ES2015 will! Each object has a unique identity because it has its own pointer (place in memory). The Map datatype uses that pointer as the key, so entries are guaranteed to be collision-free!

let urls = new Map()

let blobUrl = blob => {

if (urls.has(blob)) {

return urls.get(blob)

} else {

let url = URL.createObjectURL(blob)

urls.set(blob, url)

return url

}

}

Boom! But we introduced a subtle problem: we’re leaking memory.

That’s right! In JavaScript we normally don’t manually manage memory, but that doesn’t “free” you from thinking about memory management!

JavaScript employs several strategies and heuristics for efficient garbage collection (like reference counting and generational garbage collection), but we can assume that objects are garbage collected when they are no longer “reachable.”

The urls local variable is in scope and reachable during the app’s entire lifetime. All keys and values in a Map stick around explicitly until removed. So unless we explicitly delete entries from the Map, the Blob objects and blob: URLs will always be reachable—they’ll never be garbage collected. We’re leaking memory!

Solution #5: ES2015 WeakMaps

What if we had a Map datatype that doesn’t prevent the keys from being garbage collected, and automatically deletes the key-value pair once the object becomes unreachable?

That’s precisely what a WeakMap does! It allows us to associate data with an object, but without modifying the original object. A WeakMap behaves like weak references do in Swift and Objective C. Think of them as a noncommittal friend: “If no one needs you, neither do I.”

-let urls = new Map()

+let urls = new WeakMap()